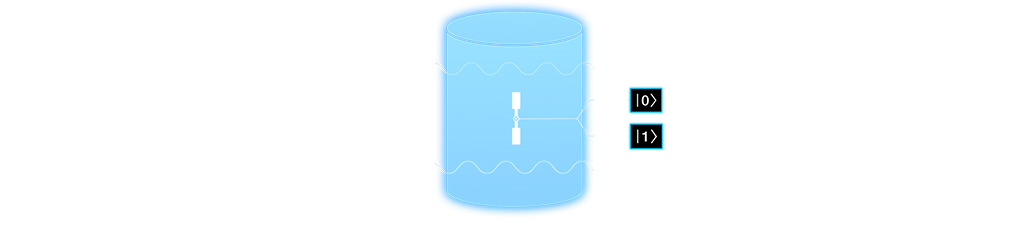

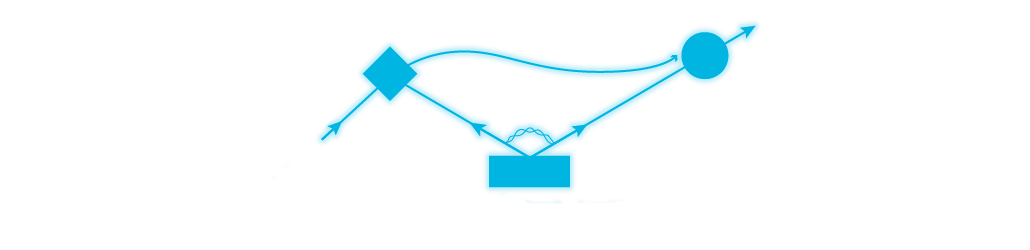

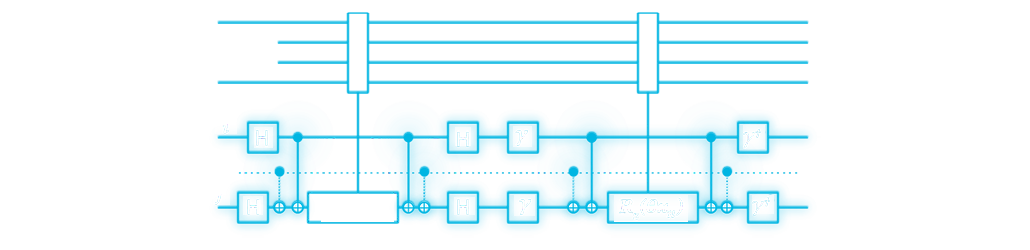

Quantum Information Science, or QIS, exploits quantum properties (such as coherence, superposition, entanglement and squeezing) and combines them with elements of information science to acquire, communicate and process information beyond what classical approaches can achieve.

The Fermilab Quantum Institute, or FQI, draws on Fermilab's existing expertise and infrastructure, and partners with leading QIS researchers, to pursue high-impact QIS research and development while advancing applications in high-energy physics (HEP). In doing so, FQI takes advantage of new quantum capabilities and builds the capacity needed to deploy these applications. In support of the Fermilab science program and HEP science objectives, FQI engages with QIS initiatives across the entire DOE Office of Science.